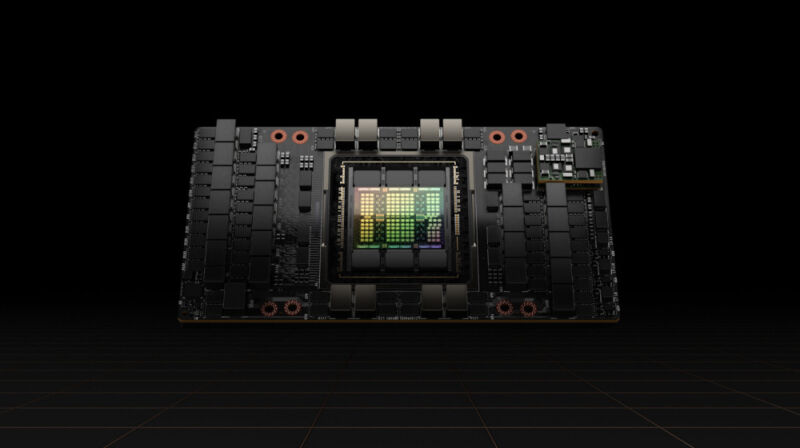

A press photo of the Nvidia H100 Tensor Core GPU. (credit: Nvidia)

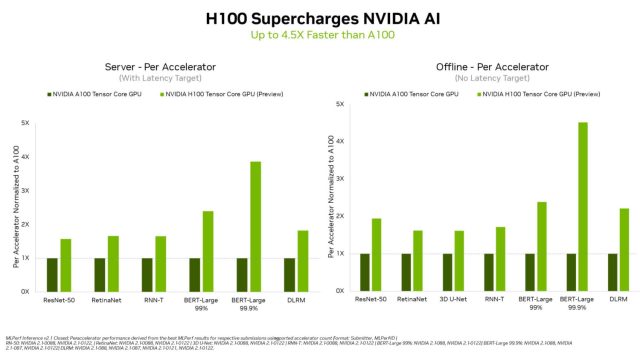

Nvidia announced yesterday that its upcoming H100 “Hopper” Tensor Core GPU set new performance records during its debut in the industry-standard MLPerf benchmarks, delivering results up to 4.5 times faster than the A100, which is currently Nvidia’s fastest production AI chip.

The MLPerf benchmarks (technically called “MLPerf™ Inference 2.1”) measure “inference” workloads, which show how well a chip can apply a previously trained machine learning model to new data. An industry group known as MLCommons developed the MLPerf benchmarks in 2018 to give potential customers a standardized way to compare machine learning performance.

The H100 did particularly well in the BERT-Large benchmark, which measures natural language processing performance using the BERT model developed by Google. Nvidia credits this result to the Hopper architecture's Transformer Engine, which is designed specifically to accelerate transformer models. This means the H100 could accelerate future natural language models similar to OpenAI's GPT-3, which can compose written works in many different styles and carry on conversations.