Thermo Fisher Scientific is known for equipment used across a variety of industries, from biosciences to environmental science, but the company is also an application developer. A growing number of its instruments can be remotely monitored and managed through the company’s Connect platform, while data is collected and analyzed through more than 40 different modules and applications.

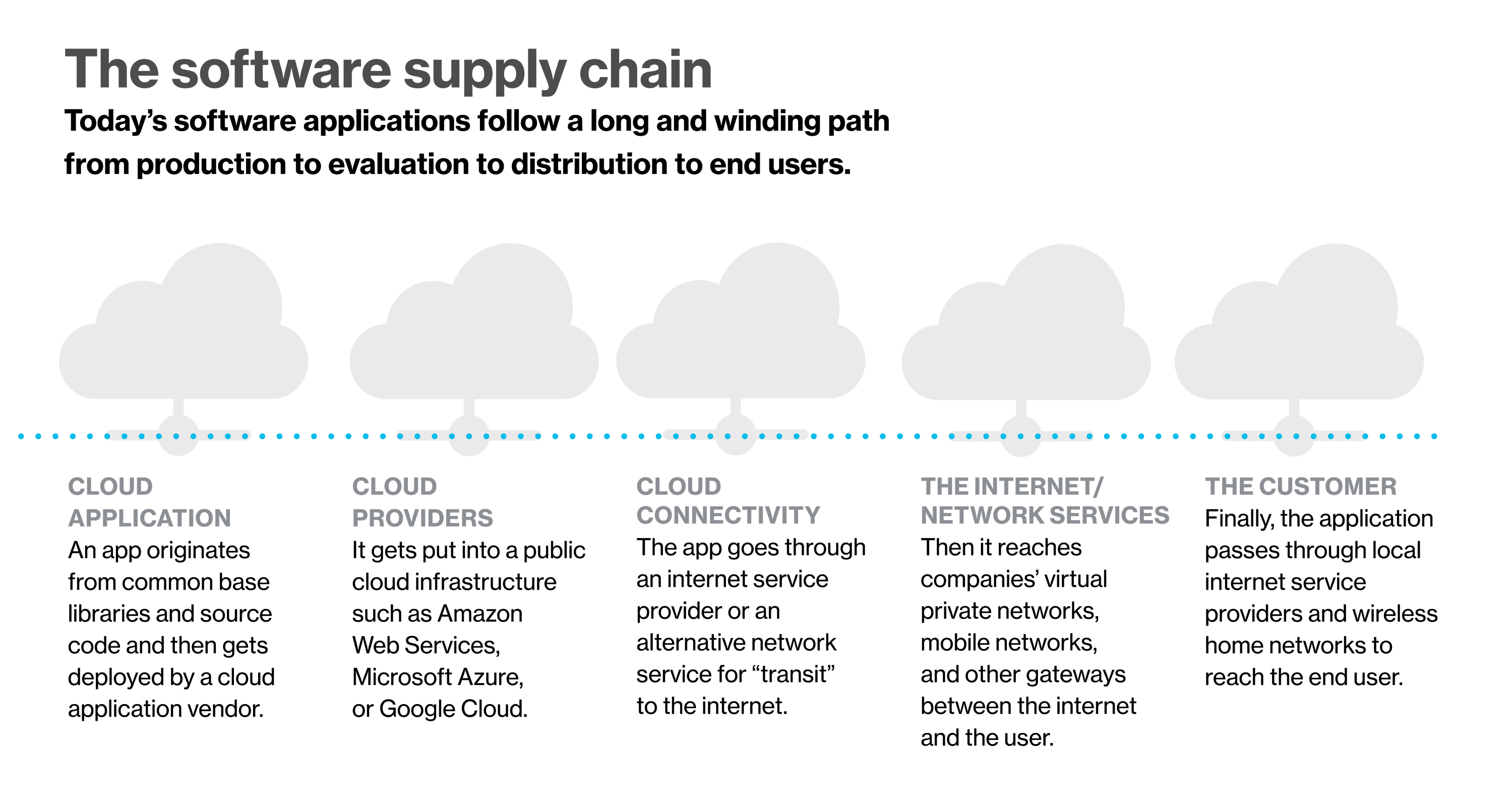

Managing those applications and keeping end users satisfied have become more difficult as modern applications increasingly rely on a complex software supply chain of open-source components, third-party tools, and delivery through cloud services. Gaining visibility into every layer, from the software running in the cloud or on a server, through the network, and down to the user, is not easy, says Santosh Ravindran, senior information technology manager at Thermo Fisher.

“I can remember those days when everything was hosted in-house and third-party components were installed on our servers, and now it has changed totally,” Ravindran says. “You have these APIs running everywhere for every aspect of the solution stack, so it is becoming increasingly complex to manage today. Everything is now connected to everything else.”

Yet without visibility, companies don’t have complete control over their cloud applications, can’t adapt to performance issues, and can’t gather intelligence on how users interact with those applications.

Hard to see: The user experience

As companies move more of their business infrastructure online, the experience of the user, whether an employee or a customer, has become the most important measure of performance. When an application runs on a company-owned device, the company has complete visibility into its performance and its interactions with users. But cloud applications have many components that aren’t under an organization’s control and might not offer an easy way to gather performance data or information about user interactions. “The software supply chain that powers applications will likely only rely more on third-party code and services in the future,” says Vishal Chawla, a principal at PricewaterhouseCoopers (PwC).

“You can monitor a lot of things, but what is most relevant to monitor is to figure out what is going on,” Chawla says. Many technologies can help untangle the mess, including application performance monitoring and cloud access security brokers (CASBs), “but the question is do you have an end-to-end strategy to have visibility into everything going on, and are you monitoring things that are relevant and not monitoring things that are not relevant?”

In December 2021, the average webpage required 74 requests to different resources to fully load in a desktop browser. Organizations also increasingly interact with applications through APIs, with the average company managing more than 360 of them. Third-party integrations and the expanding software supply chain have made tracking performance more difficult: the average software application depends on more than 500 different libraries and components.

Gaining and keeping visibility grows more onerous as the number of third parties increases, because their components lie outside a company’s control and create blind spots in the application stack. Application performance is important, but gaining insight into the application experience from the user’s point of view has become even more critical.

On improving Thermo Fisher’s application performance, Ravindran says, “We look at our standards and make sure that we capture the learnings from these failures. We do a lot of ‘lessons learned.’ And we typically try to at least keep the lights on for now, and then make sure we handle it differently later.”

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff.